Complexity: High

Time required: 3-4 hours

Material required: prototypes, paper, pen

References: Service Design Tools, servicedesigntools.org

What is it for?

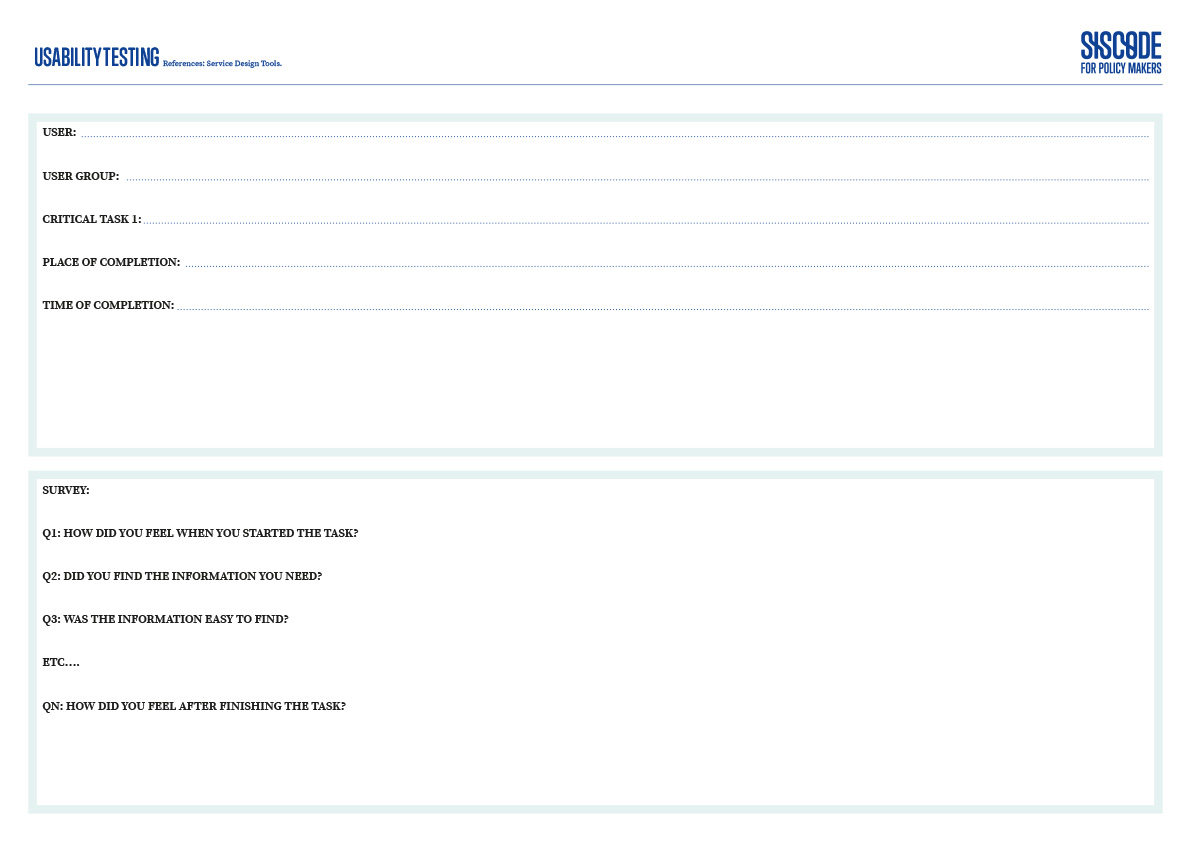

The first step is to decide exactly what you want to test (i.e. the mission-critical tasks) and to gather a group of users to take part in the testing. Ideally, users test the service/product in their natural setting (i.e. the context and moment in which they actually use it). If this is not possible, you can also conduct user testing in a more “laboratory” setting.

You will first need to identify your target audience, which should include at least one representative, and ideally a group of representatives, of each user group (e.g. beneficiaries, customers, donors, suppliers, partners, etc.).

The next step is to create the test script, which must be the same for each user type so that the evaluation is unbiased. The script should include the critical tasks to complete and a survey, or simply a list of questions, for the user to answer immediately after performing each task. Users should therefore be asked to perform a specific task and respond to its survey right away, before moving on to the next task. If users are testing in their natural environment, make them aware of how important it is to respond to the survey directly after completing each task.

When all of the tasks are completed, the team of evaluators should analyze the results to understand where the pain points are, what can be improved, and whether new features or even entirely new solutions should be designed.

How to use it?

The original heuristics were developed to evaluate user interfaces in computer software, so they should be adapted to the product at hand according to market research and requirements. For services, the heuristics could be based on touchpoints, i.e. the points along the service where the users and the organization “meet”.

There are three phases of heuristic evaluation. The ideal number of evaluators is five.

Phase 1: The Briefing Session, in which the evaluators are told what to do. It is useful to prepare a specific document, either to read aloud or for the evaluators to read on their own, so that each evaluator is given exactly the same information.

Phase 2: The Evaluation Period, in which each evaluator inspects the product/service on their own at least twice. During the first run, the evaluator becomes familiar with the process. In the second run, the evaluator can stop at specific points and identify specific usability problems.

Evaluators can be given specific evaluation tasks. In some cases, it can also be useful to pair the evaluator with a second person who writes down the problems encountered.

Phase 3: The Debriefing Session, in which the evaluators come together as a group to discuss their findings and brainstorm ways to fix any problems.

When setting up your own evaluation, the first step is to define your heuristics. Then evaluators must be chosen and the briefing document prepared.